DATASET ID# 2023-GESTURES-BAW

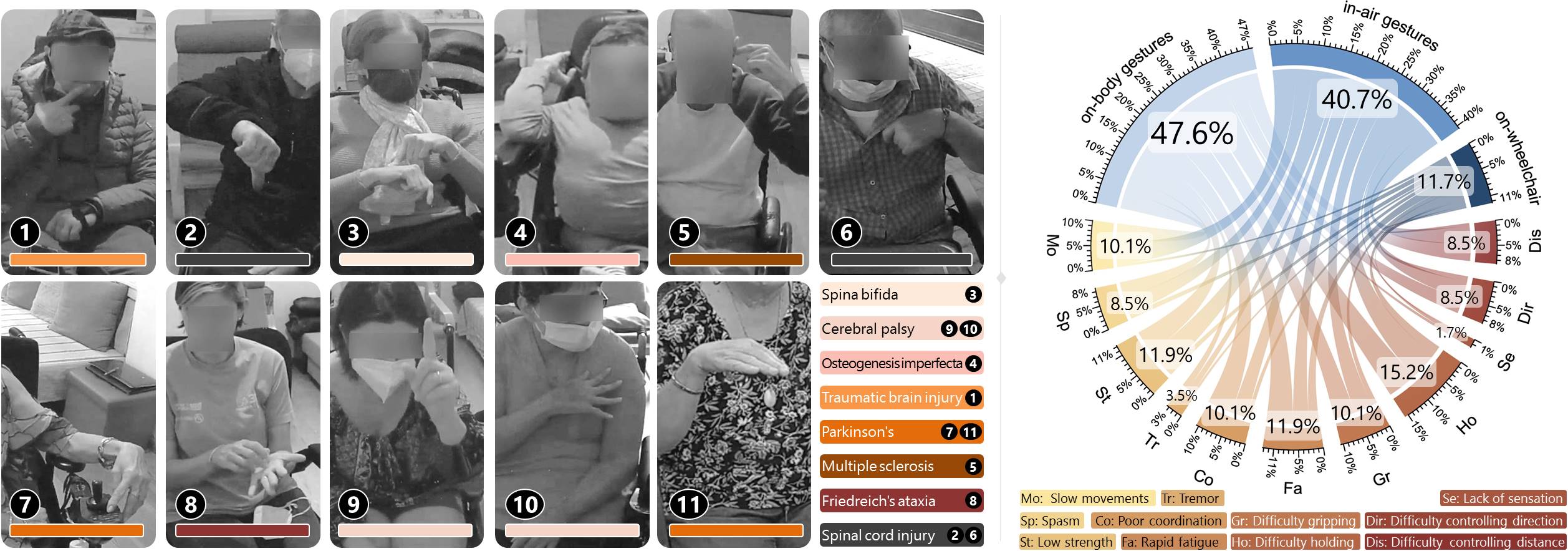

A dataset is provided with 231 gestures elicited from 11 wheelchair users with various motor impairments, who proposed on-body, in-air, and on-wheelchair gestures to effect 21 referents representing common actions, types of digital content, and navigation commands for interactive systems.

The dataset is a companion resource for Bilius et al. (2023a), which analyzed participants' gesture proposals in relation to their self-reported motor impairments, e.g., low strength, rapid fatigue, and tremor. That paper highlighted the need for personalized gesture sets tailored to, and reflective of, both wheelchair users' preferences and their specific motor abilities. In follow-up work (Bilius et al., 2023b), we examined a potential tradeoff between the expressivity of iconic and metaphoric gestures, which are less represented in our dataset, and the low complexity of simple pointing movements performed from the wheelchair space.

Resources

We release our gesture dataset to encourage further research on, and development of, gestures performed from the space of the wheelchair. The dataset includes:

- text descriptions of the participants' gestures, formatted as a CSV file

- descriptions of the participants' motor symptoms

- R code that reads the dataset and computes various gesture measures reported in the paper.
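
For readers working outside R, the following is a minimal Python sketch of how a CSV of gesture descriptions might be loaded and summarized per referent. It is not the released R code, and it assumes a hypothetical layout of one row per proposal with columns `participant`, `referent`, and `gesture`. The referent names and gesture descriptions in `SAMPLE_CSV` are invented for illustration. The measure shown is the agreement rate AR (Vatavu & Wobbrock, 2015), a measure commonly used in gesture elicitation studies, computed here as the fraction of participant pairs that proposed the same gesture. Whether and how the papers apply it is not assumed. If every participant proposed one gesture per referent, each of the 21 referents would hold 11 proposals (11 × 21 = 231).

```python
import csv
import io
from collections import Counter, defaultdict
from fractions import Fraction

# Hypothetical excerpt: the real file's name, columns, and labels may differ.
SAMPLE_CSV = """participant,referent,gesture
P01,Next,swipe right on armrest
P02,Next,swipe right on armrest
P03,Next,point right
P01,Previous,swipe left on armrest
P02,Previous,point left
P03,Previous,point left
"""


def load_proposals(text):
    """Group gesture descriptions by referent (hypothetical column names)."""
    by_referent = defaultdict(list)
    for row in csv.DictReader(io.StringIO(text)):
        # Naive normalization: identical descriptions count as the same gesture.
        by_referent[row["referent"]].append(row["gesture"].strip().lower())
    return by_referent


def agreement_rate(proposals):
    """Agreement rate AR for one referent: agreeing pairs / all pairs."""
    n = len(proposals)
    if n < 2:
        raise ValueError("AR needs at least two proposals")
    counts = Counter(proposals).values()
    # Equivalent to n/(n-1) * sum((c/n)^2) - 1/(n-1), kept exact with Fraction.
    return Fraction(sum(c * (c - 1) for c in counts), n * (n - 1))


if __name__ == "__main__":
    proposals = load_proposals(SAMPLE_CSV)
    for referent, gestures in sorted(proposals.items()):
        print(referent, len(gestures), agreement_rate(gestures))
```

Matching identical strings is a simplification. Elicitation analyses usually group free-text descriptions into gesture categories before computing agreement, so that step would replace the normalization in `load_proposals`.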

If you find our dataset and/or code useful in your work, please let us know. If you use our dataset and/or code in scientific publications, please cite Bilius et al. (2023a) and Bilius et al. (2023b), which introduced these resources.