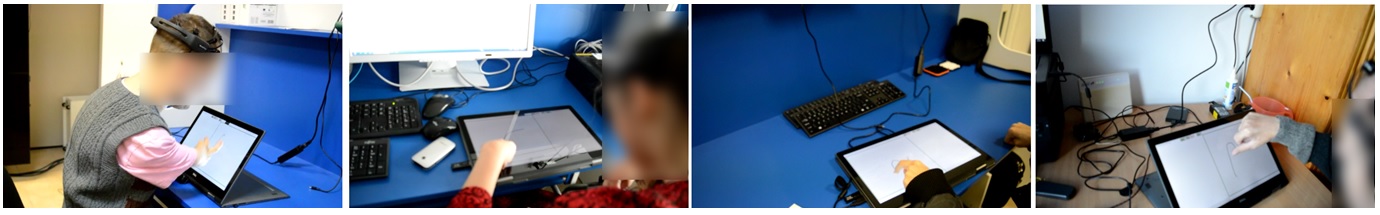

People with upper body motor impairments (see image below, left) encounter many challenges when employing touch and gesture input, one of today's dominant forms of interaction with modern computing technology. Unlike people without motor impairments (image on the right), they need to adopt workaround strategies to access content on touchscreen devices.

In this project, we design and develop new technology that helps people with motor impairments use gesture input on touchscreen devices effectively by employing eye gaze tracking, voice input, and electroencephalography (EEG) analysis.

- Radu-Daniel Vatavu, Ovidiu-Ciprian Ungurean. (2019). Stroke-Gesture Input for People with Motor Impairments: Empirical Results & Research Roadmap.

In Proceedings of CHI '19, the 37th ACM Conference on Human Factors in Computing Systems (Glasgow, Scotland, UK, May 2019). ACM, New York, NY, USA, Paper No. 215, 14 pages

ACCEPTANCE RATE: 23.8% (703/2958) | ARC A* (CORE 2018)

PDF | DOI | ISI WOS:000474467902066

- Maria-Doina Schipor. (2019). Attitude and Self-Efficacy of Students with Motor Impairments Regarding Touch Input Technology.

Revista Romaneasca pentru Educatie Multidimensionala, 11(1). Lumen Publishing, 177-186

PDF | DOI | ISI WOS:000460769100013

- Radu-Daniel Vatavu, Lisa Anthony, Jacob O. Wobbrock. (2018). $Q: A Super-Quick, Articulation-Invariant Stroke-Gesture Recognizer for Low-Resource Devices.

In Proceedings of MobileHCI '18, the 20th ACM International Conference on Human-Computer Interaction with Mobile Devices and Services (Barcelona, Spain, September 2018). ACM, New York, NY, USA, Article No. 23

ACCEPTANCE RATE: 23.1% (50/216) | ARC B (CORE 2018)

PDF | DOI | HONORABLE MENTION AWARD

- Luis A. Leiva, Daniel Martín-Albo, Radu-Daniel Vatavu. (2018). GATO: Predicting Human Performance with Multistroke and Multitouch Gesture Input.

In Proceedings of MobileHCI '18, the 20th ACM International Conference on Human-Computer Interaction with Mobile Devices and Services (Barcelona, Spain, September 2018). ACM, New York, NY, USA, Article No. 32

ACCEPTANCE RATE: 23.1% (50/216) | ARC B (CORE 2018)

PDF | DOI

- Ovidiu-Ciprian Ungurean, Radu-Daniel Vatavu, Luis A. Leiva, Daniel Martín-Albo. (2018). Predicting stroke gesture input performance for users with motor impairments.

In Proceedings of MobileHCI '18 Adjunct, the 20th ACM International Conference on Human-Computer Interaction with Mobile Devices and Services (Barcelona, Spain, September 2018). ACM, New York, NY, USA, 23-30

ARC B (CORE 2018)

PDF | DOI

- Bogdan-Florin Gheran, Ovidiu-Ciprian Ungurean, Radu-Daniel Vatavu. (2018). Toward Smart Rings as Assistive Devices for People with Motor Impairments: A Position Paper.

In Proceedings of RoCHI '18, the 15th International Conference on Human-Computer Interaction (Cluj-Napoca, Romania, September 2018). Matrix Rom, 99-106

PDF | DOI

- Bogdan-Florin Gheran, Jean Vanderdonckt, Radu-Daniel Vatavu. (2018). Gestures for Smart Rings: Empirical Results, Insights, and Design Implications.

In Proceedings of DIS '18, the 13th ACM Conference on Designing Interactive Systems (Hong Kong, China, June 2018). ACM, New York, NY, USA, 623-635

ACCEPTANCE RATE: 22.0% (107/487) | ARC B (CORE 2018)

PDF | DOI

- Bogdan-Florin Gheran, Radu-Daniel Vatavu, Jean Vanderdonckt. (2018). Ring x2: Designing Gestures for Smart Rings using Temporal Calculus.

In Proceedings of the 2018 ACM Conference Companion Publication on Designing Interactive Systems (Hong Kong, China, June 2018). ACM, New York, NY, USA, 117-122

ACCEPTANCE RATE: 46.7% (50/107) | ARC B (CORE 2018)

PDF | DOI | ISI WOS:000461155200020

- Luis A. Leiva, Daniel Martín-Albo, Réjean Plamondon, Radu-Daniel Vatavu. (2018). KeyTime: Super-Accurate Prediction of Stroke Gesture Production Times.

In Proceedings of CHI '18, the 36th ACM Conference on Human Factors in Computing Systems (Montreal, Canada, April 2018). ACM, New York, NY, USA, Paper 239, 12 pages

ACCEPTANCE RATE: 25.7% (666/2592) | ARC A* (CORE 2018)

PDF | DOI

- Ovidiu-Ciprian Ungurean, Radu-Daniel Vatavu, Luis A. Leiva, Réjean Plamondon. (2018). Gesture Input for Users with Motor Impairments on Touchscreens: Empirical Results based on the Kinematic Theory.

In Proceedings of CHI EA '18, the 36th ACM Conference Extended Abstracts on Human Factors in Computing Systems (Montreal, Canada, April 2018). ACM, New York, NY, USA, Paper LBW537, 6 pages

ACCEPTANCE RATE: 39.8% (255/641) | ARC A* (CORE 2018)

PDF | DOI

- Ovidiu-Ionut Gherman, Ovidiu-Andrei Schipor, Bogdan-Florin Gheran. (2018). VErGE: A system for collecting voice, eye gaze, gesture, and EEG data for experimental studies.

In Proceedings of DAS '18, the 14th International Conference on Development and Application Systems (Suceava, Romania, May 2018). IEEE, 150-155

PDF | DOI | ISI WOS:000467080400028

- Petru-Vasile Cioata, Radu-Daniel Vatavu. (2018). In Tandem: Exploring Interactive Opportunities for Dual Input and Output on Two Smartwatches.

In Proceedings of IUI '18 Companion, the 23rd International Conference on Intelligent User Interfaces Companion (Tokyo, Japan, March 2018). ACM, New York, NY, USA, Article 60

PDF | DOI | ISI WOS:000458680100060

- Radu-Daniel Vatavu. (2017). Characterizing Gesture Knowledge Transfer Across Multiple Contexts of Use.

Journal on Multimodal User Interfaces 11 (4). Springer, 301-314

PDF | DOI | ISI WOS:000417622600001

IF: 1.031 | 5-Year IF: 1.039 (JCR 2016)