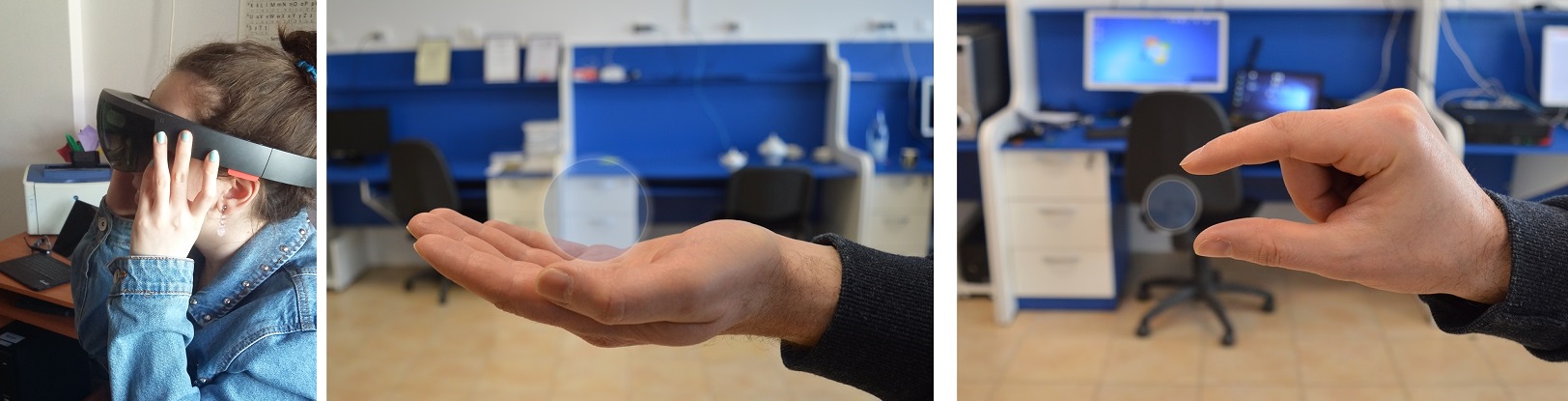

In this project, we want to develop new interactive technology to deliver a better understanding

of the visual world to people with visual impairments, but also to people with normal vision,

who may find themselves in life situations where sensory augmentation could help avoid unwanted outcomes,

, the ability to see clearer, closer or farther away than allowed by the naked eye,

or to receive sensory feedback about other events when visual attention is overwhelmed. To this end,

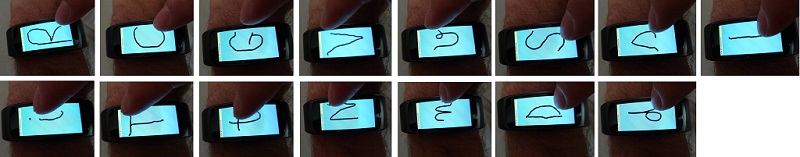

we plan to understand and leverage the possibilities offered by smart wearables,

,

smart glasses, smart watches, and others, to enhance visual perception for people with and

without visual impairments.

The goal of this project is to design, implement, and evaluate new prototypes and interaction techniques for wearable devices to support vision augmentation for users with low vision as well as for users without visual impairments. Our concrete objectives are:

- Ovidiu-Andrei Schipor, Radu-Daniel Vatavu. (2018). Invisible, Inaudible, and Impalpable: Users’ Preferences and Memory Performance for Digital Content in Thin Air. IEEE Pervasive Computing 17(4), 76–85. IEEE Press. IF: 3.813, 5-Year IF: 4.123 (JCR 2018) | WOS:000457920900009 | NOMINATED FOR THE 2020 AWARDS OF THE ROMANIAN ACADEMY

- Jean Vanderdonckt, Mathieu Zen, Radu-Daniel Vatavu. (2019). AB4Web: An On-Line A/B Tester for Comparing User Interface Design Alternatives. Proceedings of the ACM on Human-Computer Interaction 3 (EICS), Article 18 (June 2019), 28 pages. ACM, New York, NY, USA. ACCEPTANCE RATE: 33.3% (21/63) | ACM SIGCHI HONORABLE MENTION AWARD

- Adrian Aiordachioae, Radu-Daniel Vatavu. (2019). Life-Tags: A Smartglasses-based System for Recording and Abstracting Life with Tag Clouds. Proceedings of the ACM on Human-Computer Interaction 3 (EICS), Article 11 (June 2019), 24 pages. ACM, New York, NY, USA. ACCEPTANCE RATE: 33.3% (21/63)

- Radu-Daniel Vatavu. (2020). Connecting Research from Assistive Vision and Smart Eyewear Computing with Crisis Management and Mitigation Systems: A Position Paper. Romanian Journal of Information Science and Technology 23(S), S29–S39. Romanian Academy. IF: 0.485, 5-Year IF: 0.486 (JCR 2019) | WOS:000537095200004 | ACCEPTANCE RATE: 30.0% (9/30)

- Cristian Pamparau, Radu-Daniel Vatavu. (2021). FlexiSee: Flexible Configuration, Customization, and Control of Mediated and Augmented Vision for Users of Smart Eyewear Devices. Multimedia Tools and Applications 80, 30943–30968. Springer. IF: 2.757, 5-Year IF: 2.517 (JCR 2020) | WOS:000604203000002

- Radu-Daniel Vatavu, Petruta-Paraschiva Rusu, Ovidiu-Andrei Schipor. (2022). Preferences of People with Visual Impairments for Augmented and Mediated Vision: A Vignette Experiment. Multimedia Tools and Applications, 26 pages. Springer. IF: 2.757, 5-Year IF: 2.517 (JCR 2020)

- Adrian Aiordachioae, Cristian Pamparau, Radu-Daniel Vatavu. (2022). Lifelogging Meets Alternate and Cross-Realities: An Investigation into Broadcasting Personal Visual Realities to Remote Audiences. Multimedia Tools and Applications, 24 pages. Springer. IF: 2.757, 5-Year IF: 2.517 (JCR 2020)

- Adrian Aiordachioae, David Gherasim, Alexandru-Ilie Maciuc, Bogdan-Florin Gheran, Radu-Daniel Vatavu. (2020). Addressing Inattentional Blindness with Smart Eyewear and Vibrotactile Feedback on the Finger, Wrist, and Forearm. Proceedings of MUM '20, the 19th ACM International Conference on Mobile and Ubiquitous Multimedia. ACM, New York, NY, USA, 329–331. ARC B venue (ARC CORE 2021)

- Adrian Aiordachioae, Daniel Furtuna, Radu-Daniel Vatavu. (2020). Aggregating Life Tags for Opportunistic Crowdsensing with Mobile and Smartglasses Users. Proceedings of GoodTechs '20, the 6th EAI International Conference on Smart Objects and Technologies for Social Good. ACM, New York, NY, USA, 66–71. WOS:FORTHCOMING

- Radu-Daniel Vatavu. (2020). Quantifying the Consistency of Gesture Articulation for Users with Low Vision with the Dissimilarity-Consensus Method. Proceedings of MobileHCI '20 Extended Abstracts, the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services. ACM, New York, NY, USA. ARC B venue (ARC CORE 2018)

- Radu-Daniel Vatavu, Jean Vanderdonckt. (2020). What Gestures Do Users with Visual Impairments Prefer to Interact with Smart Devices? And How Much We Know About It. Proceedings of DIS '20 Companion, the 15th ACM Conference on Designing Interactive Systems (Eindhoven, The Netherlands). ACM, New York, NY, USA. ARC B venue (ARC CORE 2018) | ACCEPTANCE RATE: 25.1% (50/199)

- Radu-Daniel Vatavu, Pejman Saeghe, Teresa Chambel, Vinoba Vinayagamoorthy, Marian Florin Ursu. (2020). Conceptualizing Augmented Reality Television for the Living Room. Proceedings of IMX '20, the ACM International Conference on Interactive Media Experiences (Barcelona, Spain). ACM, New York, NY, USA. ACCEPTANCE RATE: 26% (13/50) | HONORABLE MENTION AWARD

- Ovidiu-Andrei Schipor, Adrian Aiordachioae. (2020). Engineering Details of a Smartglasses Application for Users with Visual Impairments. Proceedings of DAS 2020, the 15th International Conference on Development and Application Systems (Suceava, Romania). IEEE, 157–161. WOS:000589776100029

- Adrian Aiordachioae, Ovidiu-Andrei Schipor, Radu-Daniel Vatavu. (2020). An Inventory of Voice Input Commands for Users with Visual Impairments and Assistive Smartglasses Applications. Proceedings of DAS 2020, the 15th International Conference on Development and Application Systems (Suceava, Romania). IEEE, 146–150. WOS:000589776100027

- Irina Popovici, Radu-Daniel Vatavu. (2019). Understanding Users' Preferences for Augmented Reality Television. Proceedings of ISMAR 2019, the 18th IEEE International Symposium on Mixed and Augmented Reality (Beijing, China). IEEE, 397–406. ACCEPTANCE RATE: 22.1% (36/163) | ARC A* venue (ARC CORE 2018) | WOS:000525841300027

- Petruta-Paraschiva Rusu, Maria-Doina Schipor, Radu-Daniel Vatavu. (2019). A Lead-In Study on Well-Being, Visual Functioning, and Desires for Augmented Reality Assisted Vision for People with Visual Impairments. Proceedings of EHB '19, the 7th IEEE International Conference on e-Health and Bioengineering (Iasi, Romania). IEEE. WOS:000558648300203

- Adrian Aiordachioae. (2019). Eyewear-Based System for Sharing First-Person Video to Remote Viewers. Proceedings of EHB '19, the 7th IEEE International Conference on e-Health and Bioengineering (Iasi, Romania). IEEE. WOS:000558648300003

- Nathan Magrofuoco, Paolo Roselli, Jean Vanderdonckt, Jorge Luis Pérez-Medina, Radu-Daniel Vatavu. (2019). GestMan: A Cloud-based Tool for Stroke-Gesture Datasets. Proceedings of EICS '19, the 11th ACM SIGCHI Symposium on Engineering Interactive Computing Systems (Valencia, Spain), Article 7, 6 pages. ACM, New York, NY, USA. WOS:000524581600007 | BEST TECH NOTE AWARD

- Irina Popovici, Radu-Daniel Vatavu. (2019). Towards Visual Augmentation of the Television Watching Experience: Manifesto and Agenda. Proceedings of TVX '19, the 2019 ACM International Conference on Interactive Experiences for TV and Online Video (Manchester, UK), 199–204. ACM, New York, NY, USA. WOS:000482136600020

- Radu-Daniel Vatavu. (2019). The Dissimilarity-Consensus Approach to Agreement Analysis in Gesture Elicitation Studies. Proceedings of CHI '19, the 37th ACM Conference on Human Factors in Computing Systems (Glasgow, Scotland, UK), Paper No. 224, 14 pages. ACM, New York, NY, USA. ACCEPTANCE RATE: 23.8% (703/2958) | ARC A* venue (ARC CORE 2018) | WOS:000474467902075