SAPIENS-in-XR

Operationalizing Interaction-Attention in Extended Reality

Cristian Pamparău, MintViz Lab, Stefan cel Mare University of Suceava, Romania

Ovidiu-Andrei Schipor, MintViz Lab, Stefan cel Mare University of Suceava, Romania

Alexandru Dancu, MintViz Lab, Stefan cel Mare University of Suceava, Romania

Radu-Daniel Vatavu, MintViz Lab, Stefan cel Mare University of Suceava, Romania [contact]

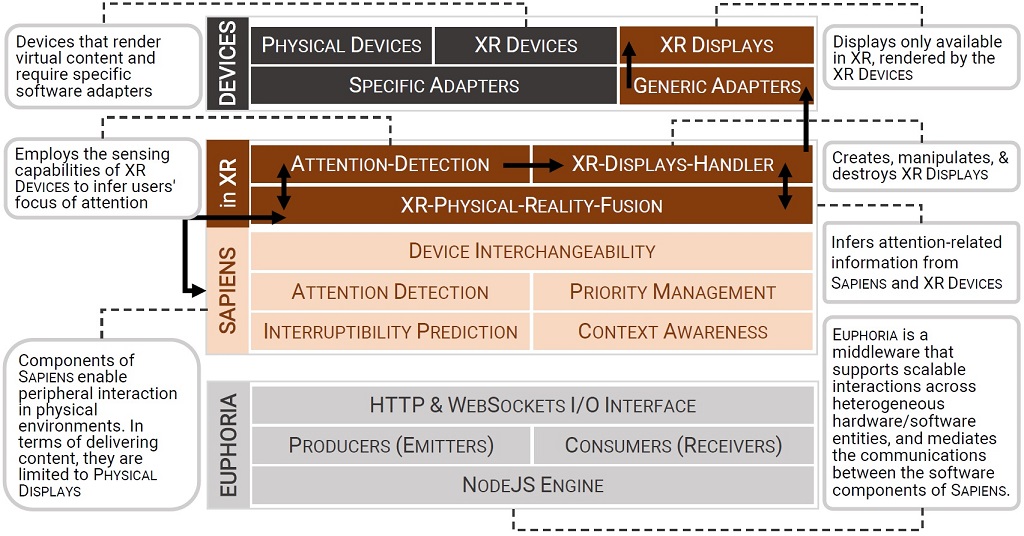

SAPIENS-in-XR is a software architecture built on top of SAPIENS (Schipor et al., 2019) to support the design and engineering of interactive systems that implement peripheral input in eXtended Reality (XR) environments.

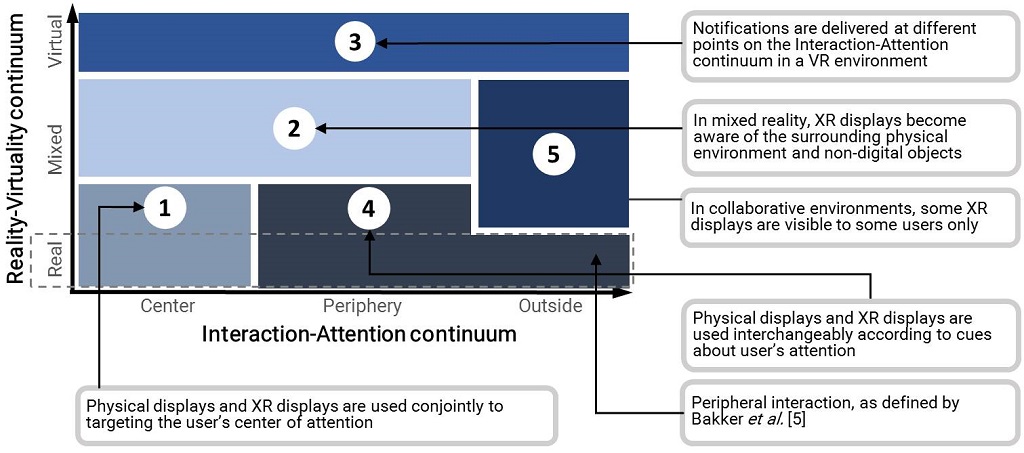

SAPIENS-in-XR operates with a conceptual space with two dimensions, Interaction-Attention (Bakker and Niemantsverdriet, 2016) and Reality-Virtuality (Milgram et al., 1995), and formalizes the notion of "XR displays" to expand the application range of ambient media displays from physical environments to XR.

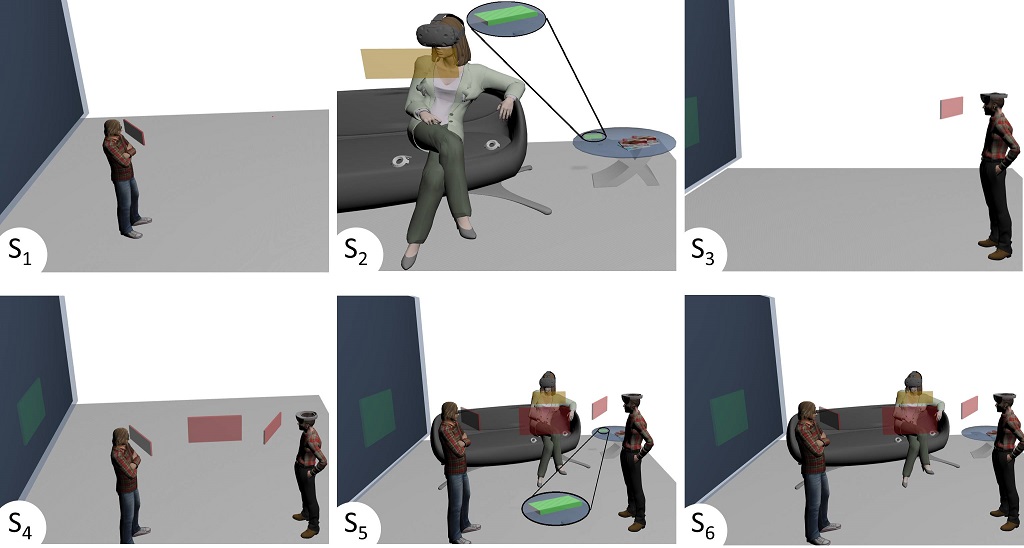

XR displays are a specific category of virtual objects rendered in XR environments that feature Itoh et al.'s (2022) spatial and visual realism and Lantz's (2007) multiscale and multiuser characteristics, and that build on top of Grubel et al.'s (2021) criteria for augmentable user screens. To these characteristics, we add two more that operationalize XR displays from the perspective of software architecture for peripheral interactions: (i) the properties of an XR display (e.g., size, location, orientation) can be dynamically changed during and following events from the physical-virtual environment and user interaction, and (ii) XR displays can be created and destroyed on-the-fly in the XR environment. Property (i) specifies the high flexibility of an XR display in contrast to its physical counterpart, while property (ii) acknowledges the heterogeneity of application needs for smart environments with multiple content sources and I/O devices that demand user attention. According to this definition, XR displays exist at the intersection of the physical and the virtual, with convenient characteristics that let them transition fluently, in terms of form factor and behavior, between the center and periphery of user attention.
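
The two added characteristics can be illustrated with a minimal JavaScript sketch. It is not taken from the SAPIENS-in-XR source code: the class names `XRDisplay` and `XRDisplayRegistry`, the method names, and the shapes of the `size`, `location`, and `orientation` values are assumptions made for illustration only.

```javascript
// Hypothetical sketch: class and property names are illustrative assumptions,
// not the SAPIENS-in-XR API.
class XRDisplay {
  constructor(id, { size, location, orientation }) {
    this.id = id;
    this.size = size;               // assumed shape: { width, height }
    this.location = location;       // assumed shape: { x, y, z }
    this.orientation = orientation; // assumed shape: { yaw, pitch, roll }
  }

  // Characteristic (i): properties may change at any time, e.g., after an
  // event from the environment or a user interaction.
  update(changes) {
    for (const key of ["size", "location", "orientation"]) {
      if (key in changes) this[key] = changes[key];
    }
    return this;
  }
}

class XRDisplayRegistry {
  constructor() {
    this.displays = new Map(); // display id -> XRDisplay
  }

  // Characteristic (ii): displays are created on-the-fly ...
  create(id, properties) {
    if (this.displays.has(id)) {
      throw new Error(`XR display "${id}" already exists`);
    }
    const display = new XRDisplay(id, properties);
    this.displays.set(id, display);
    return display;
  }

  // ... and destroyed on-the-fly.
  destroy(id) {
    return this.displays.delete(id); // true if the display existed
  }
}

// Usage: create a display, shrink it and move it aside, then remove it.
const registry = new XRDisplayRegistry();
const notes = registry.create("notes", {
  size: { width: 0.4, height: 0.3 },
  location: { x: 0, y: 1.5, z: -1 },
  orientation: { yaw: 0, pitch: 0, roll: 0 },
});
notes.update({
  size: { width: 0.1, height: 0.1 },
  location: { x: 0.8, y: 1.2, z: -1 },
});
const removed = registry.destroy("notes");
```

Restricting `update` to a known set of properties keeps the display identifier stable while everything else about the display remains adjustable at run time.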

SAPIENS-in-XR introduces new software components specific to XR:

- XR-Physical-Reality-Fusion is a software middleware that merges information from both physical and XR displays, designed to behave as an abstract interface to I/O devices.

- The Attention-Detection software component supersedes the one from SAPIENS to address aspects of user attention towards entities from the XR environment. For instance, HMDs that render virtual content integrate eye gaze and head trackers, hand and gesture detectors, and speech recognition. This information is fed into the Attention-Detection component to complement the data collected by the previous version of the component in the SAPIENS architecture.

- The Devices layer specifies physical devices, e.g., a wall display from the physical environment or the user's smartwatch, and, as a particular subcategory, XR devices, e.g., a pair of smartglasses for AR, next to XR displays as formalized above. While physical devices are tightly coupled with a particular adapter (platform, operating system, communication protocol, API, etc.), XR displays are software objects that expose generic interfaces. Nevertheless, the nature of a display, either physical or virtual, is transparent to the business logic of SAPIENS-in-XR, where all displays are ultimately represented by abstract software objects that expose given capabilities for presenting information to users, e.g., a fixed screen size for a physical display or multiple possible form factors for an XR display.

- The XR-Displays-Handler software component is in charge of the creation, manipulation, and destruction of the software objects representing displays in the SAPIENS-in-XR architecture. It receives input from Attention-Detection to update the parameters of the XR displays.
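
The relationship between the Devices layer and the XR-Displays-Handler described in the list above can be sketched in JavaScript. This is only an illustration under stated assumptions: `PhysicalDisplay`, `VirtualDisplay`, `DisplaysHandler`, the `capabilities()` and `present()` methods, the form factor names, and the `{ displayId, level }` attention input are all hypothetical and do not come from the SAPIENS-in-XR code base.

```javascript
// Hypothetical sketch: all names below are illustrative assumptions.
class PhysicalDisplay {
  constructor(id, size) {
    this.id = id;
    this.size = size; // fixed by the hardware
    this.content = null;
  }
  capabilities() {
    return { formFactors: ["fixed"] };
  }
  present(content) {
    this.content = content;
  }
}

class VirtualDisplay {
  constructor(id, formFactors) {
    this.id = id;
    this.formFactors = formFactors;
    this.formFactor = formFactors[0];
    this.content = null;
  }
  capabilities() {
    return { formFactors: [...this.formFactors] };
  }
  present(content) {
    this.content = content;
  }
  setFormFactor(name) {
    if (!this.formFactors.includes(name)) {
      throw new Error(`Unsupported form factor: ${name}`);
    }
    this.formFactor = name;
  }
}

class DisplaysHandler {
  constructor() {
    this.displays = new Map(); // display id -> display object
  }
  add(display) {
    this.displays.set(display.id, display);
    return display;
  }
  remove(id) {
    return this.displays.delete(id);
  }

  // Business logic addresses every display through the same interface.
  presentEverywhere(content) {
    for (const display of this.displays.values()) display.present(content);
  }

  // Assumed attention input: { displayId, level }, where level is
  // "center" or "periphery".
  onAttention({ displayId, level }) {
    const display = this.displays.get(displayId);
    if (!display) return false;
    const target = level === "center" ? "expanded" : "compact";
    if (!display.capabilities().formFactors.includes(target)) return false;
    display.setFormFactor(target);
    return true;
  }
}

// Usage: one physical and one virtual display behind the same handler.
const handler = new DisplaysHandler();
const wall = handler.add(new PhysicalDisplay("wall", { width: 1920, height: 1080 }));
const panel = handler.add(new VirtualDisplay("panel", ["compact", "expanded"]));
handler.presentEverywhere("3 new messages");
const panelChanged = handler.onAttention({ displayId: "panel", level: "center" });
const wallChanged = handler.onAttention({ displayId: "wall", level: "center" });
```

In this sketch the handler never checks whether a display is physical or virtual. It only queries the advertised capabilities, so the physical wall display simply declines a form factor change that the virtual panel accepts.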

Publications

- Cristian Pamparău, Ovidiu-Andrei Schipor, Alexandru Dancu, Radu-Daniel Vatavu. (2023). SAPIENS in XR: Operationalizing Interaction-Attention in Extended Reality. Virtual Reality (2023). Springer, 17 pages

Demo and source code

Our online simulation application for peripheral interactions in XR gives practitioners access to the JavaScript code and lets them observe how JSON messages are exchanged among the software components of SAPIENS-in-XR.
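
As a rough idea of what exchanging JSON messages between components can look like, the following JavaScript sketch routes serialized messages through a small in-process bus. It does not reproduce the simulation application: the `MessageBus` class, the `attention` topic, and the `sender`, `topic`, and `payload` fields are assumptions chosen for illustration.

```javascript
// Hypothetical sketch: the bus, topic names, and message fields are assumptions.
class MessageBus {
  constructor() {
    this.subscribers = new Map(); // topic -> array of callbacks
    this.log = [];                // every serialized message, for inspection
  }

  subscribe(topic, callback) {
    if (!this.subscribers.has(topic)) this.subscribers.set(topic, []);
    this.subscribers.get(topic).push(callback);
  }

  publish(sender, topic, payload) {
    const json = JSON.stringify({ sender, topic, payload });
    this.log.push(json);
    for (const callback of this.subscribers.get(topic) || []) {
      callback(JSON.parse(json)); // each receiver gets its own parsed copy
    }
    return json;
  }
}

// Usage: a receiver subscribes to attention updates, then a sender publishes one.
const bus = new MessageBus();
const received = [];
bus.subscribe("attention", (message) => received.push(message));
const sent = bus.publish("Attention-Detection", "attention", {
  displayId: "panel",
  level: "periphery",
});
console.log(sent);
```

Keeping a log of the serialized strings makes the traffic between components easy to inspect, which is the kind of observation the simulation application is meant to support.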