4.1. Design of the gesture dictionary

The design of the gesture dictionary involves the following objectives:

§ proposal for a gesture dictionary including hand postures, gesture trajectories and head movements

§ recruiting volunteer users in order to collect video data containing gesture commands

§ ergonomic studies of the users' capacity to interact with an information system by means of gestures, and considerations on the complexity, naturalness, flexibility and efficiency of the proposed gestures.

Taking virtual reality systems as an example, three types of commands can be identified with respect to the possible interactions:

§ general application commands (such as yes/no/menu activation, etc.)

§ commands for interacting with virtual objects (translation, rotation, scale, etc.)

§ commands for travel in the virtual environment (change of the camera's point of view, travel inside a scene, zoom operations, etc.)

General application commands are addressed neither to objects nor to the virtual environment, but to the application as a whole:

§ activate / deactivate the gesture interaction technology – a necessary gesture signalling the user's decision to use the interaction technology, a decision that must be made known to the system

§

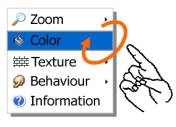

working with menus (a proposal consisting in 3 operations is presented in the picture below) – absolutely necessary in the existing WIMP metaphore (Windows, Icons, Menus, Point)

§ yes / no – there is the necessity for simple gestures to confirm/negate at several stages of interaction (validating an

action, answering to a question of the application, etc.)

§

undo / redo – simple gestures to annulate or to allow the remake of an operation.

|

|

|

Menu activation |

Selecting an option from a contextual menu attached to a virtual object |

Closing the menu |

Figure 1.

Gestures proposal for working with menus In what concerns the commands that relate to working with virtual objects, we can identify:

§ selection (single or multiple) – see an example in the figure below

§ translation of a virtual object inside a scene

§ rotation of an object (to be considered: should the rotation be performed using one hand or two?)

§ scaling of a virtual object

Figure 2. Gestures proposal for selection / rotation of a virtual object

The commands for travel inside a virtual environment are listed below (two of them make use of natural head movements, as presented in the figure below):

§ change of the camera's point of view

§ travel along a previously chosen direction (normal speed, increased speed, etc.)

§ zoom operations on the scene

Figure 3. Head movements to indicate change in the travel direction
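To make the structure of the dictionary more concrete, the sketch below models each entry as a combination of hand posture, gesture trajectory and head movement that maps to a command in one of the three command families listed above. It is a minimal illustration under stated assumptions, not the project's design: the class names, the gesture labels (`open_palm`, `wave`, `head_left`) and the rule that only the activate / deactivate gesture is honoured while interaction is switched off are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional, Tuple


class CommandType(Enum):
    # The three command families identified for virtual reality systems.
    APPLICATION = "general application command"
    OBJECT = "interaction with virtual objects"
    TRAVEL = "travel in the virtual environment"


# (hand posture, trajectory, head movement); None means "not used by this gesture".
Key = Tuple[Optional[str], Optional[str], Optional[str]]


@dataclass(frozen=True)
class GestureEntry:
    command: str
    category: CommandType
    hand_posture: Optional[str] = None   # hypothetical posture label
    trajectory: Optional[str] = None     # hypothetical trajectory label
    head_movement: Optional[str] = None  # hypothetical head movement label

    @property
    def key(self) -> Key:
        return (self.hand_posture, self.trajectory, self.head_movement)


class GestureDictionary:
    ACTIVATION = "toggle_activation"  # hypothetical name of the activate/deactivate command

    def __init__(self) -> None:
        self._entries: Dict[Key, GestureEntry] = {}
        self.active = False  # gesture interaction starts switched off

    def add(self, entry: GestureEntry) -> None:
        # Reject ambiguous definitions: one gesture must map to one command.
        if entry.key in self._entries:
            raise ValueError(f"gesture {entry.key} is already mapped")
        self._entries[entry.key] = entry

    def lookup(self, posture=None, trajectory=None, head=None) -> Optional[GestureEntry]:
        entry = self._entries.get((posture, trajectory, head))
        if entry is None:
            return None
        if entry.command == self.ACTIVATION:
            self.active = not self.active  # always honoured, toggles the state
            return entry
        return entry if self.active else None  # other commands only when active


if __name__ == "__main__":
    d = GestureDictionary()
    d.add(GestureEntry("toggle_activation", CommandType.APPLICATION,
                       hand_posture="open_palm", trajectory="wave"))
    d.add(GestureEntry("turn_left", CommandType.TRAVEL, head_movement="head_left"))
    print(d.lookup(head="head_left"))          # None: interaction not yet activated
    d.lookup(posture="open_palm", trajectory="wave")
    print(d.lookup(head="head_left").command)  # turn_left
```

Keying entries on the full triple lets `add` reject ambiguous definitions when the dictionary is built, which is one possible way of checking its consistency.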

4.2. Visual acquisition of hand and head gestures

For hand gesture detection and acquisition, we propose a segmentation technique based on complementary information obtained using:

§ color features (color based segmentation)

§ motion detection and background subtraction methods

§ derived features (such as edges resulting from an edge detection process)
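One way these complementary cues could be combined is sketched below: a running-average background model gives a foreground (motion) mask, which is then intersected with a skin color mask. This is a minimal sketch assuming grayscale frames stored as nested lists, a logical AND as the fusion rule, and illustrative values for `alpha` and `threshold`; the proposal does not specify the fusion rule, and the edge-based cue is not shown.

```python
from typing import List

Gray = List[List[float]]  # grayscale frame, one list per row
Mask = List[List[int]]    # binary mask, 1 = pixel selected


def update_background(background: Gray, frame: Gray, alpha: float = 0.05) -> Gray:
    """Running-average background model: B <- (1 - alpha) * B + alpha * I."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]


def foreground_mask(background: Gray, frame: Gray, threshold: float = 25.0) -> Mask:
    """Background subtraction: 1 where the frame differs from the model by more than threshold."""
    return [[1 if abs(f - b) > threshold else 0 for b, f in zip(brow, frow)]
            for brow, frow in zip(background, frame)]


def fuse(skin: Mask, moving: Mask) -> Mask:
    """Keep only pixels that are both skin-colored and moving (logical AND)."""
    return [[s & m for s, m in zip(srow, mrow)] for srow, mrow in zip(skin, moving)]


background = [[10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
frame = [[10.0, 200.0, 200.0], [10.0, 10.0, 200.0]]
skin = [[1, 1, 0], [0, 0, 1]]  # e.g. the output of a color-based skin test
moving = foreground_mask(background, frame)
print(fuse(skin, moving))      # [[0, 1, 0], [0, 0, 1]]
background = update_background(background, frame)  # adapt the model for the next frame
```

The AND rule discards static skin-colored background objects and moving non-skin objects; a weighted vote between cues would be a softer alternative.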

The emphasis is on color segmentation and on the design of a dynamic adaptive filter for skin color detection in the HSV color space (see Figure 4). Each pixel of the current video frame is classified as skin using the following condition:

$$\mathrm{skin}(x,y)=\begin{cases}1, & H_{min}\le H(x,y)\le H_{max}\ \text{and}\ S_{min}\le S(x,y)\le S_{max}\\ 0, & \text{otherwise}\end{cases}$$

where the hue / saturation thresholds $H_{min}$, $H_{max}$, $S_{min}$, $S_{max}$ are determined automatically as a function of the particularities of the analysed video frame (brightness, background objects, etc.).
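A minimal sketch of the rectangular hue / saturation test, using Python's standard `colorsys` module (which returns H, S and V in [0, 1]). The threshold values below are hypothetical and only for illustration; in the proposed filter they would be recomputed for every frame. Brightness (V) is ignored by the test, and a hue interval that wraps around red would need two comparisons.

```python
import colorsys
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def is_skin(rgb: RGB, h_range: Tuple[float, float], s_range: Tuple[float, float]) -> bool:
    """Rectangular hue/saturation test; V (brightness) is deliberately ignored."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, _v = colorsys.rgb_to_hsv(r, g, b)
    # Note: this assumes h_range does not wrap around 1.0 (red).
    return h_range[0] <= h <= h_range[1] and s_range[0] <= s <= s_range[1]


def segment(frame: Sequence[Sequence[RGB]], h_range, s_range) -> List[List[int]]:
    """Binary skin mask: 1 where the pixel satisfies the hue/saturation condition."""
    return [[1 if is_skin(px, h_range, s_range) else 0 for px in row] for row in frame]


H_RANGE = (0.0, 0.1)  # hypothetical thresholds, for illustration only
S_RANGE = (0.2, 0.6)
frame = [[(220, 170, 140), (30, 60, 200)],
         [(128, 128, 128), (210, 160, 130)]]
print(segment(frame, H_RANGE, S_RANGE))  # [[1, 0], [0, 1]]
```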

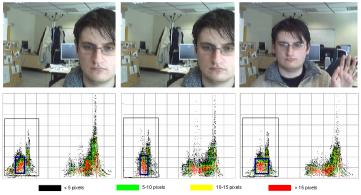

Figure 4. Three consecutive video frames and their associated 2D hue / saturation histogram

(Vatavu et al., Advances in Electrical and Computer Engineering, 1/2005)

The need for this solution is evident in the pictures above, which show 3 consecutive video frames: the 2D hue / saturation histograms vary considerably from one frame to the next. In each histogram, the larger rectangle marks the maximum limits within which skin color may vary, while the smaller rectangle marks the actual skin color limits for the given video frame.
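The proposal does not detail how the per-frame limits (the smaller rectangle) are derived, so the sketch below is only one plausible approach, labelled as an assumption: build a 2D hue / saturation histogram, look only at the bins inside the maximum skin limits (the larger rectangle), keep the bins whose count reaches a fraction of the peak, and return their bounding box clamped to the outer rectangle. The bin count, `min_fraction` and the outer limits are hypothetical values, and working at bin resolution makes the limits approximate.

```python
import colorsys
from typing import Iterable, List, Tuple

Rect = Tuple[float, float, float, float]  # (h_min, h_max, s_min, s_max), all in [0, 1]


def hs_histogram(pixels: Iterable[Tuple[int, int, int]], bins: int = 32) -> List[List[int]]:
    """2D hue/saturation histogram: hist[hue_bin][saturation_bin] = pixel count."""
    hist = [[0] * bins for _ in range(bins)]
    for r, g, b in pixels:
        h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        hist[min(int(h * bins), bins - 1)][min(int(s * bins), bins - 1)] += 1
    return hist


def adaptive_limits(hist: List[List[int]], outer: Rect, min_fraction: float = 0.1) -> Rect:
    """Hypothetical per-frame skin limits, searched only inside the outer rectangle."""
    bins = len(hist)
    h_lo, h_hi, s_lo, s_hi = outer
    h_bins = range(int(h_lo * bins), min(int(h_hi * bins), bins - 1) + 1)
    s_bins = range(int(s_lo * bins), min(int(s_hi * bins), bins - 1) + 1)
    peak = max((hist[i][j] for i in h_bins for j in s_bins), default=0)
    if peak == 0:
        return outer  # no skin-like pixels: fall back to the maximum limits
    kept = [(i, j) for i in h_bins for j in s_bins if hist[i][j] >= min_fraction * peak]
    i0, i1 = min(i for i, _ in kept), max(i for i, _ in kept)
    j0, j1 = min(j for _, j in kept), max(j for _, j in kept)
    # Bounding box of the retained bins, clamped so it stays inside the outer rectangle.
    return (max(h_lo, i0 / bins), min(h_hi, (i1 + 1) / bins),
            max(s_lo, j0 / bins), min(s_hi, (j1 + 1) / bins))


OUTER = (0.0, 0.15, 0.1, 0.7)  # hypothetical maximum skin limits
pixels = [(220, 170, 140)] * 50 + [(200, 150, 150)] + [(30, 60, 200)] * 20
inner = adaptive_limits(hs_histogram(pixels), OUTER)
print(inner)
```

Here the isolated pixel `(200, 150, 150)` falls below the `min_fraction` cut and the blue pixels lie outside the outer rectangle, so the returned limits tighten around the dominant skin tone.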

The preprocessing algorithms for skin color detection will first be developed on desktop systems; a later implementation on a hardware System on Chip (SoC) architecture is envisaged in order to reduce the total processing time and to relieve the main system of additional computation. We are considering a SoC architecture that will:

§ capture video information in real time using a camera attached to the device

§ process the captured video frames in real time using skin color and motion detection methods

§ receive working parameters from the PC, such as frames per second, image resolution, compression type, etc.

§ transfer to the PC the obtained results, i.e. the areas where motion was detected or the skin color regions of interest
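The PC / SoC exchange above amounts to a small protocol: a parameter message going to the device and a result message coming back. The sketch below shows one possible binary encoding with Python's standard `struct` module; the message layout (a `P` marker byte, 8-bit fps, 16-bit width and height, an 8-bit compression code, and regions sent as 16-bit `(x, y, w, h)` boxes) and the compression codes are entirely hypothetical, and the Ethernet transport itself is not shown.

```python
import struct
from dataclasses import dataclass
from enum import IntEnum
from typing import List, Tuple


class Compression(IntEnum):  # hypothetical compression codes
    RAW = 0
    MJPEG = 1


# Hypothetical layout, network byte order: marker, fps (u8), width (u16), height (u16), compression (u8).
PARAM_FORMAT = "!cBHHB"


@dataclass
class WorkingParameters:
    fps: int
    width: int
    height: int
    compression: Compression

    def pack(self) -> bytes:
        return struct.pack(PARAM_FORMAT, b"P", self.fps, self.width, self.height,
                           int(self.compression))

    @classmethod
    def unpack(cls, data: bytes) -> "WorkingParameters":
        marker, fps, width, height, comp = struct.unpack(PARAM_FORMAT, data)
        if marker != b"P":
            raise ValueError("not a parameter message")
        return cls(fps, width, height, Compression(comp))


def pack_regions(regions: List[Tuple[int, int, int, int]]) -> bytes:
    """Result message: region count (u8) followed by (x, y, w, h) boxes as u16 values."""
    out = struct.pack("!B", len(regions))
    for box in regions:
        out += struct.pack("!HHHH", *box)
    return out


def unpack_regions(data: bytes) -> List[Tuple[int, int, int, int]]:
    (count,) = struct.unpack_from("!B", data, 0)
    return [struct.unpack_from("!HHHH", data, 1 + 8 * k) for k in range(count)]


params = WorkingParameters(fps=25, width=320, height=240, compression=Compression.MJPEG)
message = params.pack()  # 7 bytes sent from the PC to the device
assert WorkingParameters.unpack(message) == params
```

A fixed, compact layout keeps parsing trivial on the 32-bit embedded processor; a versioned header would make later changes to the message format easier.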

The aim of the hardware architecture is to reduce the processing power required on the desktop machine by performing a series of preprocessing steps on specialized hardware. The architecture includes: (1) a 32-bit microprocessor (Xilinx MicroBlaze); (2) an Ethernet interface for PC communication; (3) UART and JTAG interfaces for debugging; (4) an SDRAM/FLASH interface for data storage; (5) a VGA/LCD graphical interface for displaying results; (6) a USB interface for communication with the video camera. The device presents a high level of novelty both in its implementation and in its software interfacing: although research in gesture recognition is progressing rapidly, there are, to our knowledge, no references in the literature to similar devices.

For face detection, an algorithm based on [Viola & Jones, 2001] will be developed, using Haar-based features and the AdaBoost learning method. Boosting methods aim to improve the performance of an arbitrary learning algorithm: the method takes as input a training set and a series of classifiers considered weak (i.e., with poor individual performance), and combines all these weak classifiers into a strong one. The weak classifiers we are going to use are of the following type:

$$h(x)=\begin{cases}1, & \text{if } p\,f(x) < p\,\theta\\ 0, & \text{otherwise}\end{cases}$$

where $f$ represents a Haar feature, $\theta$ a threshold and $p$ a parity indicator for the direction of the inequality; $x$ represents a sample from the training set. The classifiers selected by the AdaBoost method will be organized in a cascade structure, in which a positive result of one classifier triggers the computation of the next one. The basic idea is that most of the analysed image sub-windows are rejected after computing only a few classifiers, which greatly reduces the total computing time. A simple classifier can be trained to reach 100% accuracy on the positive examples of the training set, at the cost of a higher false positive rate; these false positives are then eliminated by the next levels of the cascade.
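The weak classifier, its boosted combination and the cascade described in the paragraph above can be sketched as follows. This is an illustration under assumptions, not the planned implementation: a single two-rectangle Haar feature is computed from an integral image, the boosted stage uses the ½ Σα voting threshold of Viola and Jones, and the AdaBoost training that would select features and compute θ, p and the weights α is not shown (in a real cascade each stage threshold is also lowered to keep the detection rate high). The feature position, θ = 10 and the weights are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Image = List[List[int]]
Integral = List[List[int]]
Weak = Tuple[Callable[[Integral], int], float, int]  # (feature f, threshold theta, parity p)
Stage = Tuple[Sequence[Weak], Sequence[float]]        # weak classifiers and AdaBoost weights


def integral_image(img: Image) -> Integral:
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Integral, x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle with top-left corner (x, y), in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: Integral, x: int, y: int, w: int, h: int) -> int:
    """Two-rectangle Haar feature: left half minus right half of a w x h window."""
    half = w // 2
    return rect_sum(ii, x, y, half, h) - rect_sum(ii, x + half, y, half, h)


def weak(value: float, theta: float, parity: int) -> int:
    """h(x) = 1 if p * f(x) < p * theta, else 0."""
    return 1 if parity * value < parity * theta else 0


def stage_accepts(ii: Integral, stage: Stage) -> bool:
    """Boosted (strong) classifier: weighted vote compared with half the total weight."""
    classifiers, alphas = stage
    score = sum(a * weak(f(ii), theta, p) for (f, theta, p), a in zip(classifiers, alphas))
    return score >= 0.5 * sum(alphas)


def run_cascade(ii: Integral, stages: Sequence[Stage]) -> Tuple[bool, int]:
    """Return (accepted, number of stages evaluated); stops at the first rejection."""
    for k, stage in enumerate(stages, start=1):
        if not stage_accepts(ii, stage):
            return False, k
    return True, len(stages)


def edge(ii: Integral) -> int:
    return two_rect_feature(ii, 0, 0, 4, 2)  # hypothetical feature position and size


stage = ([(edge, 10, -1)], [1.0])  # parity -1: the weak classifier fires when f(x) > 10
stages = [stage, stage]
print(run_cascade(integral_image([[9, 9, 1, 1], [9, 9, 1, 1]]), stages))  # (True, 2)
print(run_cascade(integral_image([[5, 5, 5, 5], [5, 5, 5, 5]]), stages))  # (False, 1)
```

The flat window is rejected after a single stage, which is the early-rejection behaviour that makes the cascade fast.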

4.3. The software architecture of the interaction system

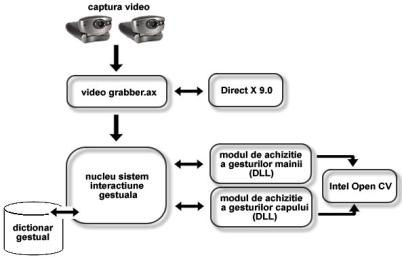

In order to implement the interaction system, the following modules will be developed:

§ a video capture module, implemented as a DirectShow filter (VideoGrabber.ax)

§ a hand gesture acquisition module, implemented as a DLL library (using OpenCV)

§ a face and head movement module (DLL, using OpenCV)

§ the gesture dictionary

Figure 5. Software system architecture
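The data flow between these modules can be illustrated with a small, language-neutral sketch: the capture module pushes each frame to a single registered callback, the application hands the frame to the hand and head modules, and the resulting labels are looked up in the gesture dictionary. Everything here is a hypothetical stand-in written in Python (the actual modules are a DirectShow filter and OpenCV-based DLLs); the class names, the toy "bright frame means open palm" rule and the dictionary contents exist only to show the structure.

```python
from typing import Callable, Dict, List, Optional, Protocol, Tuple

Frame = List[List[int]]  # placeholder frame type: grayscale rows


class GestureModule(Protocol):
    def process(self, frame: Frame) -> Optional[str]: ...


class VideoGrabber:
    """Stand-in for the capture filter: pushes every frame to one registered callback."""

    def __init__(self) -> None:
        self._callback: Optional[Callable[[Frame], None]] = None

    def set_callback(self, callback: Callable[[Frame], None]) -> None:
        self._callback = callback

    def deliver(self, frame: Frame) -> None:
        # In the real module this would be triggered by the capture filter graph.
        if self._callback is not None:
            self._callback(frame)


class InteractionApp:
    def __init__(self, hand: GestureModule, head: GestureModule,
                 dictionary: Dict[Tuple[Optional[str], Optional[str]], str]) -> None:
        self.hand, self.head, self.dictionary = hand, head, dictionary
        self.commands: List[str] = []

    def on_frame(self, frame: Frame) -> None:
        key = (self.hand.process(frame), self.head.process(frame))
        command = self.dictionary.get(key)
        if command is not None:
            self.commands.append(command)


class BrightHand:  # toy stand-in for the hand gesture module
    def process(self, frame: Frame) -> Optional[str]:
        pixels = [p for row in frame for p in row]
        return "open_palm" if sum(pixels) / len(pixels) > 100 else None


class StillHead:  # toy stand-in for the head movement module
    def process(self, frame: Frame) -> Optional[str]:
        return None


grabber = VideoGrabber()
app = InteractionApp(BrightHand(), StillHead(), {("open_palm", None): "menu_activation"})
grabber.set_callback(app.on_frame)
grabber.deliver([[200, 200], [200, 200]])
grabber.deliver([[10, 10], [10, 10]])
print(app.commands)  # ['menu_activation']
```

Keeping the capture side behind a single callback means the recognition modules never depend on how frames are acquired, which matches the "very simple interface" intended for the capture filter.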

The modules will be developed using Microsoft Visual Studio .NET 7.0, the Microsoft DirectX 9.0 SDK and the Intel OpenCV image processing library. In order to comply with the new Microsoft standards for media streaming on Windows platforms, the DirectShow component of DirectX 9.0 will be used. DirectShow supports video capture both from WDM (Windows Driver Model) sources and from older Video for Windows devices; another advantage is the automatic detection of video hardware acceleration. For the video capture module, a DirectShow filter will be developed that can be inserted in the main filter graph and exposes a very simple interface: a callback function that delivers video frames to the main application.

4.4. Several implementations of the gesture interaction system

4.4.1. Working in virtual environments

The gesture interaction system will be implemented for Spin3D, a collaborative virtual environment currently under development at LIFL (Laboratoire d'Informatique Fondamentale de Lille). The goal is to ensure a natural manipulation of virtual objects (translations, rotations, scale changes, changes of the virtual objects' properties and behaviour) using gestures similar to those proposed in section 4.1 for the gesture dictionary. Gesture-based interaction with virtual objects is already the subject of a PhD thesis conducted within the collaboration between the University of Suceava and LIFL, Université des Sciences et Technologies de Lille.

4.4.2. Working in augmented reality

The augmented reality application will use a video projector to project virtual objects onto a real working table, next to real objects (see the picture below). Real and virtual objects will be handled in the same manner, hence with the same gestures (see the specification of the gesture dictionary, a very important part of this project proposal).

Figure 6. Working with virtual objects in an augmented reality application: the virtual objects are projected onto a real working table

4.4.3. Interfacing with a static arm-type robotic system

The Hercules robot system has been developed at the University of Suceava over several years as a computer vision system. It is an arm-type robot with pincers that can execute commands within an action sphere of 501 mm range. Commands are sent to the robot by an image processing application developed at the University of Suceava: the system includes a visual analysis component that processes the objects in the robot's working area and issues commands for grabbing and moving them. The research will aim at introducing a gesture-based interface for controlling the robot, taking into account its specific types of operations.

4.4.4. Interfacing with a mobile robotic system

The mobile robot Centaure is the result of a collaboration between the University of Suceava and the automatic control laboratories of Polytech'Lille, Université des Sciences et Technologies de Lille. The robot is capable of patrolling a previously defined perimeter and of gathering information about the environment. The specifications of the Centaure robot include: a rectangular base of 70 cm x 40 cm; inner-mounted wheels with rear traction (two 20 cm wheels and two small jockey wheels); a speed controller for 12/24 V motors; an OOPic-R microcontroller; position sensors; contact sensors; infrared sensors; infrared markers for the patrolling perimeter; a video camera. The research will be directed towards a gesture command interface based on specific gestures (waving to get attention, launching commands, changing the travel direction, etc.).

4.5. The novelty and the complexity of the proposal

The novelty and the complexity of the proposal lie in:

§ designing a gesture dictionary intended to become a standard for gesture-based interfaces, taking into account the ergonomics, naturalness and flexibility of the gestures and how easily they can be memorized. Gestures for specific actions have been proposed in the literature, but there has been no attempt at standardization on a common interaction gesture dictionary

§ analyzing gesture trajectories by correlating the visual information from two video cameras. Stereoscopic analysis has been attempted, but existing approaches focus on simple and specific actions. The proposal aims at integrating the tracking of both hand trajectories and head movements in a single analysis, within a viable model that meets the requirements of an interaction interface, in line with current top research in computer vision

§ experimenting and validating the system by implementing it for 4 different scenarios (inside a virtual reality system for virtual object manipulation, inside an augmented reality system, for commanding the static arm-type robot Hercules and for controlling the mobile robot Centaure)
